【ICSGPS 2026】The 3rd International Conference on Smart Grid and Power Systems Concludes Successfully in Nanning

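Spring filled the Green City as energy gathered along the Yong River. In this early-spring season of hope and new opportunity, the 3rd International Conference on Smart Grid and Power Systems (ICSGPS 2026) opened in Nanning, China, on February 1, 2026.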

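The conference was hosted by Guangxi University and jointly supported by Zhejiang University, Shanghai Jiao Tong University, Wuhan University, Wuhan University of Technology, Southwest University of Science and Technology, Northeast Electric Power University, Hunan University, Central South University, Southwest Petroleum University, Chang'an University, the publisher ELSP, the ESBK International Academic Exchange Center, and the AC Academic Platform. It aimed to bring together leading scholars, industry experts, and young researchers from around the world working in power and energy, smart grids, power electronics, and system integration for in-depth exchanges on key topics such as building new-type power systems, grid integration of renewable energy, and the digitalization and intelligence of power grids, jointly advancing scientific innovation and industrial collaboration in the energy and power sector.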

Opening Ceremony

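On the morning of February 1, the conference officially opened in the conference hall of the Yongheng Langyue Hotel in Nanning. Several hundred experts, scholars, and young students from around the world, attending online and on site, gathered to witness the opening. Prof. Cheng Haozhong of Shanghai Jiao Tong University, Honorary Chair of the conference, delivered the opening address on behalf of the organizing committee; Prof. Qin Chengrong of Guangxi University gave the welcome speech on behalf of the host; and the ceremony was chaired by Assoc. Prof. Chen Biyun of Guangxi University.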

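▲Prof. Cheng Haozhong, Shanghai Jiao Tong University, delivering the opening address

▲Prof. Qin Chengrong, Guangxi University, delivering the welcome speech

▲Assoc. Prof. Chen Biyun, Guangxi University, chairing the ceremony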

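Four scholars delivered keynote talks in the main session: Wen Fushuan, Professor at Zhejiang University and IEEE Fellow; He Yigang, Professor at Wuhan University, recipient of the National Science Fund for Distinguished Young Scholars, Foreign Member of the European Academy of Natural Sciences, Life Fellow of the Royal Society of Arts (UK), and Fellow of the Chinese Society for Electrical Engineering; Mohamed Benbouzid, Professor at the University of Brest, France, and IEEE Fellow; and Li Jinghua, Professor at Guangxi University, selected for a major national talent program, and IET Fellow. The talks and discussions covered electricity markets, smart grid operation and maintenance, tidal power generation, and power system response control.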

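▲Prof. Wen Fushuan, Zhejiang University, delivering his keynote

▲Prof. He Yigang, Wuhan University, delivering his keynote

▲Prof. Mohamed Benbouzid, University of Brest, delivering his keynote

▲Prof. Li Jinghua, Guangxi University, delivering her keynote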

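Next, Prof. Zhang Yiyi of Guangxi University moderated the Scholars & Deans Forum, which featured Prof. Cheng Haozhong (Shanghai Jiao Tong University), Prof. Zhou Keliang (Wuhan University of Technology), Prof. Wang Tao (Xihua University), and Prof. Liu Shengyong (Guangxi University of Science and Technology). The audience engaged actively, and the panelists and attendees held an in-depth academic discussion.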

▲Scholars & Deans Forum

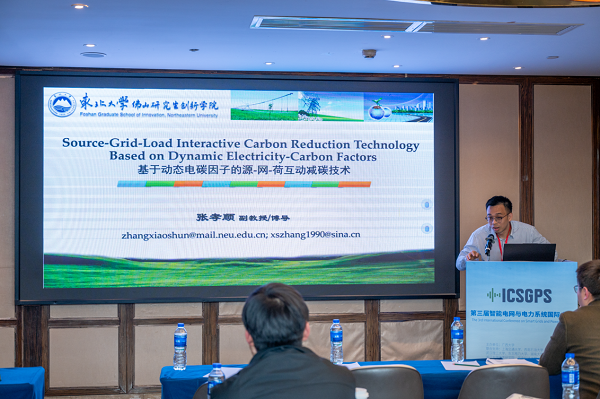

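In the afternoon, the conference held parallel sessions, including invited talks by four scholars: Prof. Liu Chengxi (Wuhan University), Prof. Huang Yunhui (Wuhan University of Technology), Assoc. Prof. Zhang Xiaoshun (Northeastern University), and Assoc. Prof. Zhao Zhigao (Wuhan University). Researchers from many universities and research institutions presented their latest work. The talks were substantive and the discussions thorough, further strengthening communication and collaboration across the academic community.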

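▲Prof. Huang Yunhui, Wuhan University of Technology, giving an invited talk

▲Assoc. Prof. Zhang Xiaoshun, Northeastern University, giving an invited talk

▲Assoc. Prof. Zhao Zhigao, Wuhan University, giving an invited talk

▲Assoc. Prof. Qian Tong, South China University of Technology, presenting

▲Prof. Wang Tao, Xihua University, presenting

▲Prof. Liu Shengyong, Guangxi University of Science and Technology, presenting

▲Parallel Session 1

▲Parallel Session 2

▲Parallel Session 3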

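In the evening, an awards banquet honored the supporting organizations as well as the organizations and individuals who stood out in paper submissions, forum organization, and presentations. Several awards were presented to encourage innovative exploration and outstanding performance by young scholars.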

Awards ceremony at the banquet

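In a lively and congenial atmosphere, the organizers shared their vision for the next ICSGPS, expressing the hope of building an even higher-level international exchange platform, further promoting innovative breakthroughs and cross-disciplinary integration in related fields in China and abroad, and together opening a new chapter in smart grid development.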

【ICTEC 2026】International Conference on Transportation Engineering and Control Held Successfully in Changsha, Hunan

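From January 30 to February 1, 2026, the International Conference on Transportation Engineering and Control (ICTEC 2026), hosted by Central South University and co-organized by the ESBK International Academic Exchange Center and the AC Academic Platform, was held in Changsha, Hunan. Through keynote talks, thematic sessions, and research presentations, the conference focused on the shared challenges, shifting technological paradigms, and pathways for upgrading industry-academia-research collaboration in transportation engineering and control.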

Conference group photo

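On the morning of January 31, the conference officially opened. Conference Chair Prof. Zhang Jian of Southeast University delivered the opening address, highlighting the conference's role in promoting academic exchange and scientific cooperation, and, on behalf of the organizing committee, extended a warm welcome to participants who had traveled from afar.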

Prof. Zhang Jian, Southeast University, delivering the opening address

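The conference was honored to host keynote talks by several influential experts in transportation engineering and control, including Prof. Liu Hui of Central South University, a Changjiang Scholar of the Ministry of Education; Prof. Zhang Jian, Deputy Director of the Office of Academic Affairs at Southeast University; and Prof. Wu Jianqing of Shandong University, a Qilu Young Scholar. In addition, Assoc. Prof. Liu Zongcheng of Air Force Engineering University was specially invited to give a thematic talk. The speakers presented their latest research results and emerging trends at the frontier of transportation engineering and control, and the in-depth, lively exchanges reflected the intellectual vitality and innovative momentum of the academic community.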

▲Keynote and invited talks in the main session

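In the afternoon parallel sessions, scholars from many well-known universities and research institutions presented their latest results in turn. The talks combined theoretical depth with practical relevance, the academic atmosphere was strong, and the Q&A sessions were lively, reflecting the community's in-depth exploration of, and fresh thinking on, key problems.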

▲Parallel sessions

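At the awards banquet, the organizing committee presented a number of honorary certificates and medals to scholars who made outstanding contributions to the conference. Their insight and enthusiasm laid a solid foundation for the smooth running of the event and brought strong energy to the academic exchanges.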

▲Awards banquet

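The 2026 International Conference on Transportation Engineering and Control (ICTEC 2026) has concluded successfully. The conference provided a high-level exchange platform for the transportation and control fields, brought together many experts, scholars, and core researchers, and effectively promoted the integration of frontier theoretical exploration, key technological breakthroughs, and industrial practice. The organizing committee stated that it will continue to draw on high-quality international academic resources and deepen interdisciplinary innovation and research collaboration, and that it looks forward to meeting colleagues again at the next conference to chart the field's future together.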

【FICMSS 2026】The 3rd International Conference on Intelligent Control, Measurement and Signal Systems Concludes in Nanning

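From January 23 to 25, the 3rd International Conference on Intelligent Control, Measurement and Signal Systems (FICMSS 2026) was held in Nanning, Guangxi. It was hosted by Guilin University of Technology and jointly supported by the Xi'an Jiaotong University–China Nuclear Power Engineering Co., Ltd. Joint Laboratory of Intelligent Decision-Making and Predictive Operation Technology for Nuclear Power, Jiangsu University of Science and Technology, the ESBK International Academic Exchange Center, and the AC Academic Platform. Experts, scholars, and young researchers from universities, research institutes, and industry in China and abroad gathered in the Green City to share frontier results, assess technology trends, and promote deeper industry-academia-research integration in intelligent control, measurement, and signal systems.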

Conference venue

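On the morning of January 24, FICMSS 2026 opened in Nanning. The main session was chaired by Zhou Yuan of Tianjin University. Conference Chair Prof. Fei Juntao of Hohai University first delivered the opening address on behalf of the organizing committee, congratulating all involved on the opening of the conference and thanking the experts and scholars who had supported it. Li Zhenchong, Deputy Party Secretary of Guilin University of Technology, then gave the welcome speech, warmly welcoming guests from afar and introducing the university's latest developments in intelligent control and measurement technology.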

▲Prof. Fei Juntao delivering the opening address

▲Deputy Party Secretary Li Zhenchong delivering the welcome speech

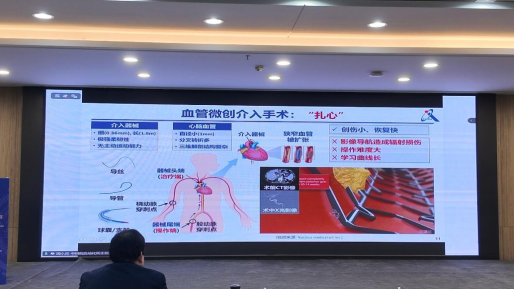

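The conference brought together a number of well-known experts in intelligent control, measurement, and signal systems. The morning main session featured keynote talks by Prof. Cheng Wei (Xi'an Jiaotong University), Prof. Zhou Xiaohu (Institute of Automation, Chinese Academy of Sciences), Prof. Fei Juntao (Hohai University), and Prof. Gao Hongyuan (Harbin Engineering University), which examined in depth the integrated application and prospects of frontier technologies such as AI-based control, advanced sensing, and next-generation signal processing systems in manufacturing.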

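▲Prof. Cheng Wei delivering his keynote

▲Prof. Zhou Xiaohu delivering his keynote online

▲Prof. Fei Juntao delivering his keynote

▲Prof. Gao Hongyuan delivering his keynote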

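In addition to the main-session keynotes, scholar talks and parallel sessions were held that afternoon, with more than twenty presentations in total. Participants discussed hot topics including intelligent control theory and systems, network intelligence and networked control, intelligent fault detection and diagnosis, measurement signal processing and analysis, high-performance processors and intelligent instruments, signal processing in mobile communications, and embedded systems and deep learning. Presenters from many universities and research institutions shared their latest progress, and the lively exchanges further promoted communication and cooperation between academia and industry.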

▲Parallel Session 1

▲Parallel Session 2

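In the evening, the conference held an awards banquet. To encourage young scholars to keep innovating in intelligent control, measurement, and signal systems, the organizing committee honored the organizations and individuals who stood out at the conference, bringing new energy to the development of the discipline.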

Photo set: awards banquet

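FICMSS 2026 was not only an important venue for showcasing research in intelligent control, measurement, and signal systems, but also a key opportunity to advance technology transfer and academic cooperation. With the rapid development of artificial intelligence, edge computing, and multi-source sensing, traditional measurement and control technologies are quickly moving toward intelligent, networked, and integrated designs. FICMSS 2026 provided scholars and industry with a high-level international exchange platform, fostering deep integration and collaborative innovation in intelligent control, measurement, and signal systems.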

【HDIS 2025】The 6th International Conference on High Performance Big Data and Intelligent Systems Held Successfully in Wuxi

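On January 24, 2026, the 6th International Conference on High Performance Big Data and Intelligent Systems (HDIS) was held in Wuxi. It was hosted by the Institute of Semiconductors, Chinese Academy of Sciences, and Jiangnan University, and co-organized by the Liangxi District Government of Wuxi, the Administrative Committee of the Wuxi High-tech Zone, Macau University of Science and Technology, Tianjin University of Technology, the Mechanical and Power Design Institute of China Railway Design Corporation, the publisher ELSP, the ESBK International Academic Exchange Center, and the AC Academic Center. The conference received strong support from the Administrative Committee of the Wuxi High-tech Zone.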

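Under the core theme "High Performance Big Data, Intelligent Systems", the conference brought together well-known experts and young scholars in artificial intelligence and big data from China and abroad for in-depth exchanges on topics such as innovation in computing architectures, intelligent data mining, and intelligent sensing and autonomous decision-making systems, jointly promoting the integration of high-performance computing and intelligent technologies and supporting the growth of the new-generation information technology industry.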

Conference group photo

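On the morning of the 24th, the conference opened in a solemn yet warm atmosphere. The opening ceremony was chaired by Program Chair Research Prof. Ning Xin of the Institute of Semiconductors, Chinese Academy of Sciences. On behalf of the hosts and the organizing committee, Conference Chair Prof. Wu Xiaojun of Jiangnan University and Research Prof. Li Weijun of the Institute of Semiconductors, Chinese Academy of Sciences, welcomed the attending experts and scholars and thanked the partner organizations for their long-standing support of the conference.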

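Delivering the welcome speech on behalf of the host, Prof. Wu Xiaojun warmly greeted the experts and scholars from China and abroad and introduced Wuxi's industrial foundation and innovation ecosystem in integrated circuits, intelligent manufacturing, new-generation information technology, and artificial intelligence. He expressed the hope that the conference would further deepen the integration of academia and industry and promote breakthroughs in key core technologies and the translation of research results into practice.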

Prof. Wu Xiaojun, Jiangnan University, delivering the welcome speech

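In his address, Research Prof. Li Weijun reviewed the history of HDIS. Since its founding, the conference has focused on core areas such as high-performance computing architectures, big data analytics, and intelligent system design, gradually growing into an academic exchange platform with considerable international influence. He noted that this edition would help further strengthen interdisciplinary cooperation and advance the joint innovation of high-performance computing and artificial intelligence.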

Research Prof. Li Weijun, Institute of Semiconductors, Chinese Academy of Sciences, delivering his address

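The main session featured keynote talks by several well-known Chinese experts in artificial intelligence, big data, and intelligent systems. Focusing on key directions such as high-performance computing, distributed intelligence, information processing, and pattern recognition, the talks systematically presented the latest theoretical results and engineering practice, highlighting the interdisciplinary and innovative character of these fields.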

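Prof. Tian Yonghong, Boya Distinguished Professor and doctoral supervisor at Peking University, IEEE Fellow, and 2018 recipient of the National Science Fund for Distinguished Young Scholars, delivered a keynote titled "Beyond Multimodal Foundation Models: Practical Pathways and Challenges on the Road to World Models".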

Prof. Tian Yonghong, Peking University, delivering his keynote

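Next, Prof. Xiong Hongkai of the School of Integrated Circuits (School of Information and Electronic Engineering), Shanghai Jiao Tong University, a national high-level talent, IEEE Fellow, AAIA Fellow, Fellow of the Chinese Institute of Electronics, and CCF Fellow, delivered a keynote titled "Intelligence and Mathematics: Posteriori and Priori".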

Prof. Xiong Hongkai, Shanghai Jiao Tong University, delivering his keynote

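Prof. Li Weisheng, Vice President of Chongqing University of Posts and Telecommunications and Changjiang Scholar Distinguished Professor of the Ministry of Education, delivered a keynote titled "Multi-dimensional Registration and Multi-modal Fusion for Spinal Surgery Image Navigation".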

Prof. Li Weisheng, Chongqing University of Posts and Telecommunications, delivering his keynote

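Prof. Wu Xiaojun, IAPR Fellow, AAIA Fellow, AIIA Fellow, NAAI Corresponding Member, Zhishan Professor and Dean of the Graduate School at Jiangnan University, delivered a keynote titled "Multimodal Visual Fusion and Enhancement: General Purpose Perspectives".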

Prof. Wu Xiaojun, Jiangnan University, delivering his keynote

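In the afternoon, two parallel sessions were held. Several talks drew on real-world cases to show how intelligent systems perform in complex scenarios. The forum discussions were highly interactive, with in-depth exchanges on model reliability, security, and the practical difficulties of engineering deployment, reflecting the close link between academic research and industry needs. Young scholars and graduate students actively presented their work, which closely tracked the frontier of the discipline. Questions from the floor were plentiful and the discussions thorough, covering experimental design, model innovation, and engineering implementation and creating a strong academic atmosphere. Several attending experts remarked that these results demonstrated the innovative vitality and solid grounding of the new generation of researchers.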

▲Parallel Session 1

▲Q&A during a parallel session

▲Parallel Session 2

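HDIS will continue to uphold the principles of open cooperation and interdisciplinary innovation, expanding its international partnerships, deepening cross-disciplinary integration, and building an even more influential high-level academic exchange platform. The organizing committee stated that the next conference will strengthen ties with industry, promote the translation of research results into practical applications, and support innovation in intelligent technologies.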

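Through the joint efforts of the participating experts and scholars, the 6th International Conference on High Performance Big Data and Intelligent Systems came to a successful close.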

【RAITS 2026】International Conference on Robotics, Automation and Intelligent Transportation Systems Held Successfully in Xi'an

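From January 23 to 25, the International Conference on Robotics, Automation and Intelligent Transportation Systems (RAITS 2026) was held in Xi'an. The conference was hosted by Chang'an University and the Key Laboratory of Road Construction Technology and Equipment of the Ministry of Education, with joint support from the publisher ELSP, the ESBK International Academic Exchange Center, the AC Academic Platform, Xi'an Polytechnic University, and other universities and institutions. Experts and scholars from more than one hundred well-known universities, research institutes, and companies in China and abroad took part.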

▲Conference group photo

▲Conference venue

▲Main-session chair Prof. Zuo Lei, School of Electronics and Control Engineering, Chang'an University

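Focusing on frontier developments in robotics, automation engineering, and intelligent transportation systems, the conference featured keynote talks, invited talks, and several thematic sessions, attracting a broad range of experts and scholars. At the opening ceremony, Prof. Yan Maode, Dean of the School of Electronics and Control Engineering at Chang'an University, delivered the opening address, emphasizing the importance of innovation and development as well as exchange and cooperation.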

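▲Prof. Yan Maode, Dean of the School of Electronics and Control Engineering, Chang'an University, delivering the opening address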

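Several internationally influential experts in robotics, automation, and transportation delivered keynote talks: Prof. Huang Panfeng of Northwestern Polytechnical University, recipient of the National Science Fund for Distinguished Young Scholars and a national leading talent; Prof. Hu Qiao, a national high-level talent, Deputy Director of the Institute of Intelligent Robotics and Deputy Director of the Institute of Robotics and Intelligent Systems at Xi'an Jiaotong University; Prof. Zong Guangdeng of Tiangong University, listed among the world's top 2% of scientists; and Prof. Hui Fei, a Shaanxi Provincial Leading Talent in Science and Technology Innovation (young and middle-aged category) and Vice Dean of the School of Electronics and Control Engineering at Chang'an University. The speakers shared their latest research, bringing frontier academic perspectives to the conference. In addition, Assoc. Prof. Yang Le of the School of Electronics and Control Engineering at Chang'an University, a Shaanxi Youth Talent, gave an invited talk.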

Keynote talks in the main session

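In the afternoon parallel sessions, scholars from many universities and research institutions shared their latest research. The talks were insightful and distinctive; participants spoke openly and freely, sparking a lively exchange of ideas and giving the conference a strong spirit of discussion.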

▲Photo set: Parallel Session 1

▲Photo set: Parallel Session 2

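The 1st International Conference on Robotics, Automation and Intelligent Transportation Systems (RAITS 2026) has concluded successfully. The conference offered experts, scholars, and researchers in robotics and intelligent transportation worldwide a platform for in-depth exchange, effectively linking theoretical exploration, technological breakthroughs, and industrial application. The organizing committee expressed the hope that RAITS will continue to advance international cooperation and knowledge sharing, contributing ideas and solutions toward a safer, more efficient, and more sustainable intelligent society.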